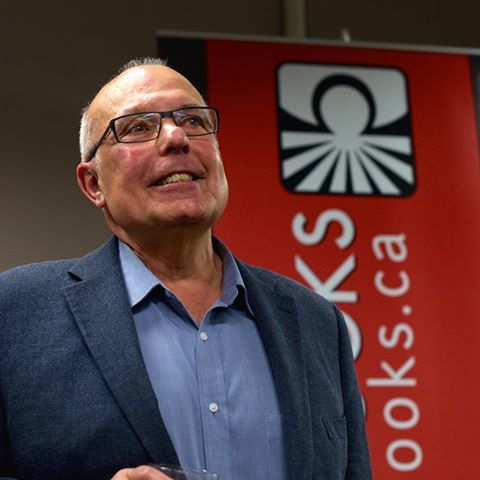

How the ranks are thinning! On Saturday, I was shocked to hear of the sudden death of another towering figure from the intellectual generation I suppose I am part of: Vincent (‘Vinny’) Mosco. Perhaps best known for his foundational importance in the study of the political economy of communication, his influence is so ubiquitous it is difficult to pinpoint the moment I first became aware of it, though it was most probably in the 1990s.

My earliest strong recollections are of a conference called Citizens at the Crossroads: Whose Information Society?, held at the University of Western Ontario in 1999. It brought together a strange mix of government policy-makers, academics and people we were learning to refer to as representatives of ‘civil society’ from across Canada and elsewhere, and was memorable for me, if for no other reason, as the place where I first got to know Leslie Regan Shade. As with so many conferences, I can remember almost nothing of the formal speeches (except for the nice way that Québécois presenters who spoke in French presented their PowerPoint slides in English, and vice versa). But I do remember deep and interesting conversations with people from a wide range of different backgrounds. One thread that seemed to hold them all together, whether they were interested in feminism, in Marxist theory, in trade union strategy or in government telecommunications policy (and however much they might disagree with each other), was a universal affection and admiration for Vinny Mosco, whose name seemed to surface one way or another in just about every conversation.

Over the next few years it became clear that, almost single-handedly, he had helped birth, either directly, as their PhD supervisor, or indirectly, through his lecturing and writing, a generation of academic researchers who combine serious, often pathbreaking, scholarship with an equally serious commitment to workers and their interests, including, to name but a few, Enda Brophy, Ellen Balka, Andrew Clement, Tanner Mirrlees, Nicole Cohen and, of course, Leslie Regan Shade. And that’s just in Canada. He has fans across the world, from California to Beijing, where he held a visiting professorship. What is the explanation for this universal respect and gratitude?

Perhaps his understanding of labour, and the ways in which it both shapes and is shaped by technology, can be traced back to his childhood. Vinny was raised in a class-conscious household in a tenement in New York’s Little Italy where (to his enormous pride) a street is now named after his father. Supported by his family, he won entry by competitive examination to one of New York’s top public high schools and from there progressed via Georgetown University in Washington to Harvard, where he had completed his PhD in Sociology by the age of 27. It is clear that he never forgot his roots while excelling academically and, unusually, managed to integrate these two aspects of his identity comfortably. This was highly productive, enabling him always to hold in view the labour that underlies any form of communication, however apparently abstract, and to integrate it into theory. It also, I suspect, gave him a kind of empathy with students, regardless of background, that might explain his huge personal and intellectual generosity to them and, in turn, their loyalty to him.

He was also highly unusual among the leading intellectuals of his generation in being supportive of women scholars and open to integrating a feminist perspective into his political economic theory. Here, perhaps, the influence of his wife, the brilliant journalist and scholar Catherine McKercher (whom he always referred to as ‘the love of my life’), might have played an important part. They were collaborators in work as well as life, co-editing, to my knowledge, at least two books and co-authoring many articles in a rare form of mutual enrichment.

When I founded the journal Work Organisation, Labour and Globalisation in 2006, Vinny was one of my strongest supporters. Right from the first issue, he contributed articles (sometimes co-authored with Cathy or with a PhD student) and made helpful recommendations. Along with Cathy, he also co-edited a special issue on communications workers and global value chains, which attracted a range of contributions from around the world that was in itself a testimony to the breadth of their influence. And right up to the end of his life he was one of the most reliable reviewers, arriving at perspicacious verdicts with lightning speed when others couldn’t agree.

I have another vivid memory of a conference, this time organised by myself, in 2014 where (there being little else in the way of attraction for foreign visitors in Hatfield, where my then employer was based) we had a grand dinner in the banqueting hall of Hatfield House, where Queen Elizabeth I was brought up. Having given a rip-roaring keynote speech to open the event, Vinny leapt to his feet at the end of the meal to propose an impromptu toast (it felt like a gust of clear air, as if the New York working class was taking on the Tudor ghosts).

He adored his family – two daughters, a granddaughter and grandson – whose grief, along with Cathy’s, must right now be hard to deal with, such is the scale of what they have lost. I hope it will be some consolation to them to know that his legacy will live on. And here I do not just mean his considerable intellectual legacy as the grandfather of the ‘political economy of communication’ and his insistence on recognising the central role of workers in the development and implementation of new technologies, but also his kindness and principled personal values, which provided a model for future generations of teachers. He will be hugely missed.